Data Visualization and Exploration

Histograms, Densities, and Tables

Introduction

Announcements

Discuss the slow rollout of the “semester project”.

Break: upload files to D2L

- Ensure your main files are named according to the convention `lastname-firstname-wk2<ext>`.
- Use your computer or a downloaded utility to create a `.zip` file of the main directory.
- Upload the `.zip` file (format `lastname-firstname-wk2.zip`) to the relevant D2L Assignments folder.

**There was no assignment from Week 3. “Sorry.”/“You are welcome.”**

Histograms and Densities

This is more difficult than it may seem.

- but more from a conceptual (“how”) perspective, and

- less from a technical (implementation) perspective

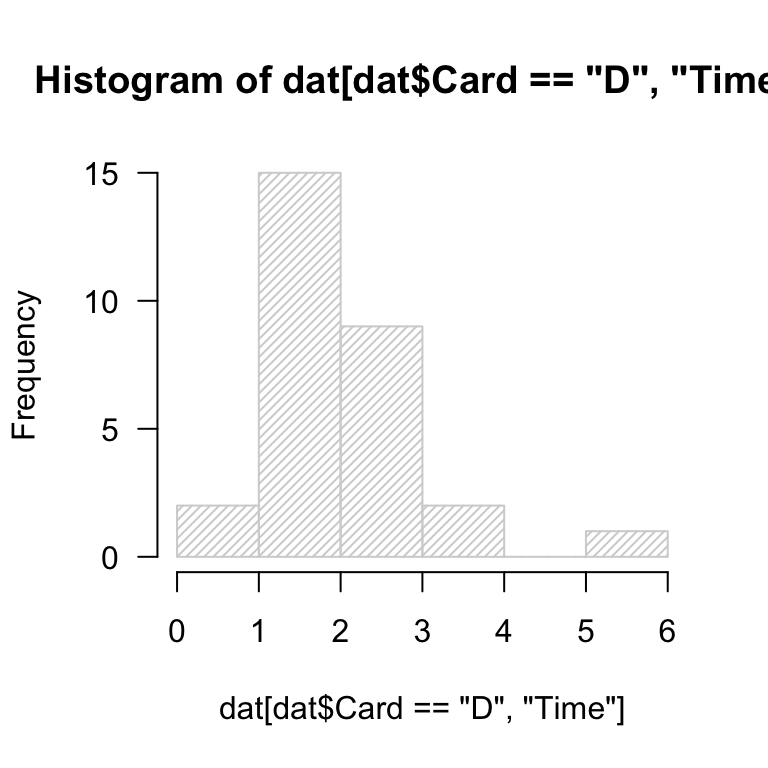

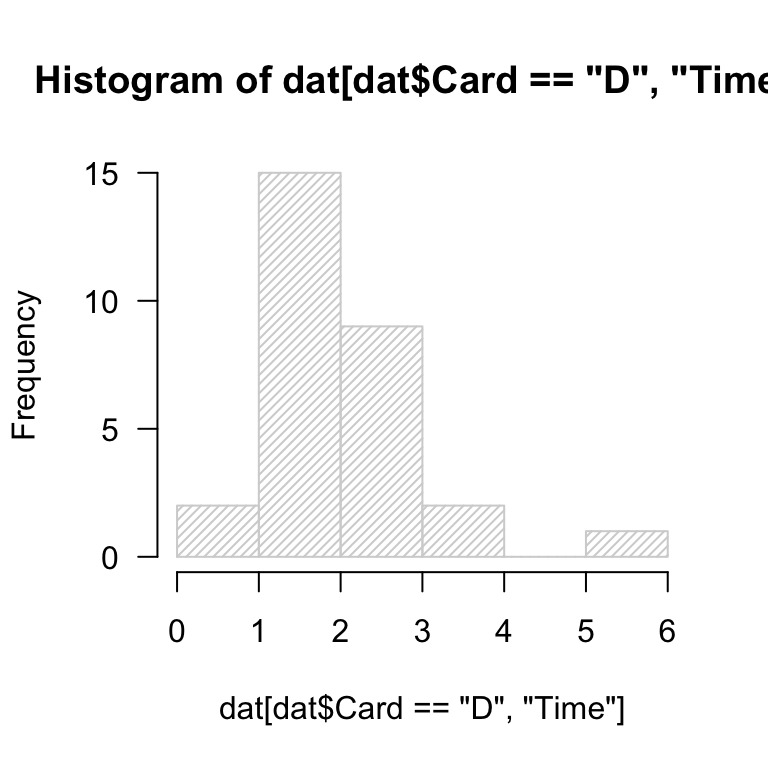

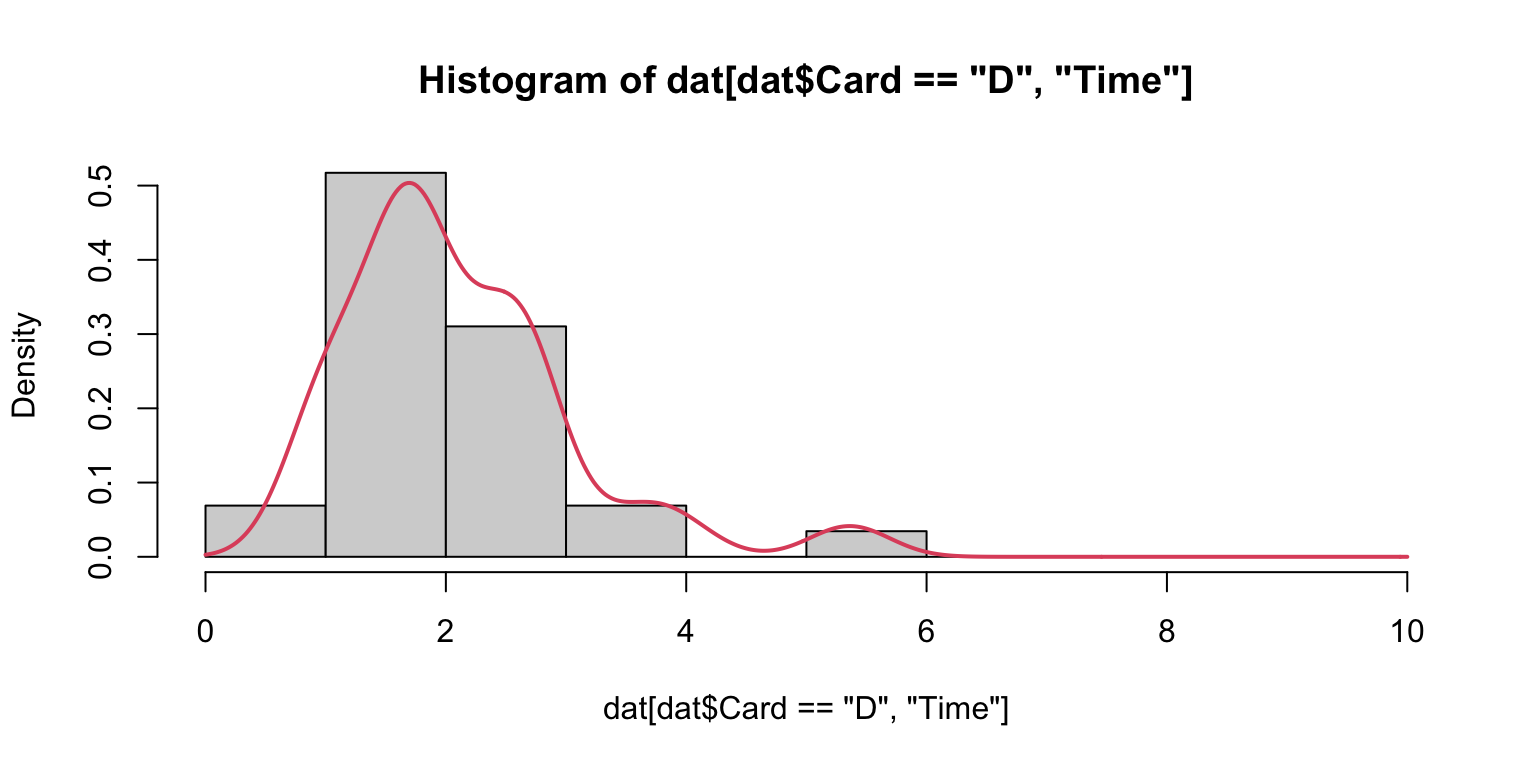

Histograms

Read in the data file `cards.csv`.

- Generate the default histogram of `Time` values for the `Card == "D"` subset.

- Experiment with `breaks = ...` to set the number of “bars”.
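A minimal sketch of this exercise, assuming `cards.csv` has columns named `Card` and `Time` as the slide suggests. So that it runs on its own, the block builds a hypothetical simulated stand-in (not the course data); swap in the commented `read.csv()` line to use the real file.

```r
# dat <- read.csv("cards.csv")  # real data; assumed columns: Card, Time
# Hypothetical simulated stand-in (NOT the course data) so the sketch runs alone:
set.seed(42)
dat <- data.frame(Card = rep(c("A", "B", "C", "D"), each = 50),
                  Time = rgamma(200, shape = 3, rate = 1))

# Time values for the Card == "D" subset
d_times <- dat[dat$Card == "D", "Time"]

# Default histogram (R's default break rule is "Sturges")
h_default <- hist(d_times, main = "Card D (default breaks)", xlab = "Time")

# Ask for roughly 20 bars; R treats a single number as a suggestion only
h_20 <- hist(d_times, breaks = 20, main = "Card D (breaks = 20)", xlab = "Time")

# Number of bars actually drawn in each case
length(h_default$counts)
length(h_20$counts)
```

Comparing the two `length()` values is a quick way to see that the number of bars drawn need not equal the number requested.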

Adding content

Layer on data from any other card (A-C) by using add = T and specifying a color.

What do you notice about the rendering of the histograms? Are you satisfied?
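One way to layer card A on top of card D, using `add = TRUE` and semi-transparent fill colors from `rgb(..., alpha)`. The shared `brks` vector is a choice made here (an assumption, not part of the exercise) so that the two sets of bars line up; try dropping it to see what the defaults do. The data are again a hypothetical simulated stand-in.

```r
# Hypothetical simulated stand-in for the cards data (columns Card, Time)
set.seed(42)
dat <- data.frame(Card = rep(c("A", "B", "C", "D"), each = 50),
                  Time = rgamma(200, shape = 3, rate = 1))
d_times <- dat$Time[dat$Card == "D"]
a_times <- dat$Time[dat$Card == "A"]

# Common break points spanning both subsets, so the bars align
brks <- pretty(range(c(d_times, a_times)), n = 20)

# The first call sets up the axes; alpha = 0.4 makes the fill see-through
hist(d_times, breaks = brks, col = rgb(0, 0, 1, 0.4),
     main = "Cards D (blue) and A (red)", xlab = "Time")

# Layer card A onto the existing plot
hist(a_times, breaks = brks, col = rgb(1, 0, 0, 0.4), add = TRUE)
```

Note that the axes are fixed by the first call, so taller bars in the added layer can be clipped; passing `ylim = ...` to the first `hist()` call is one fix.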

Layering many histograms

In general, layering more than a couple of histograms makes the result very difficult to read and interpret.

It is recommended that you use filled density plots to compare multiple distributions.

More on that later.

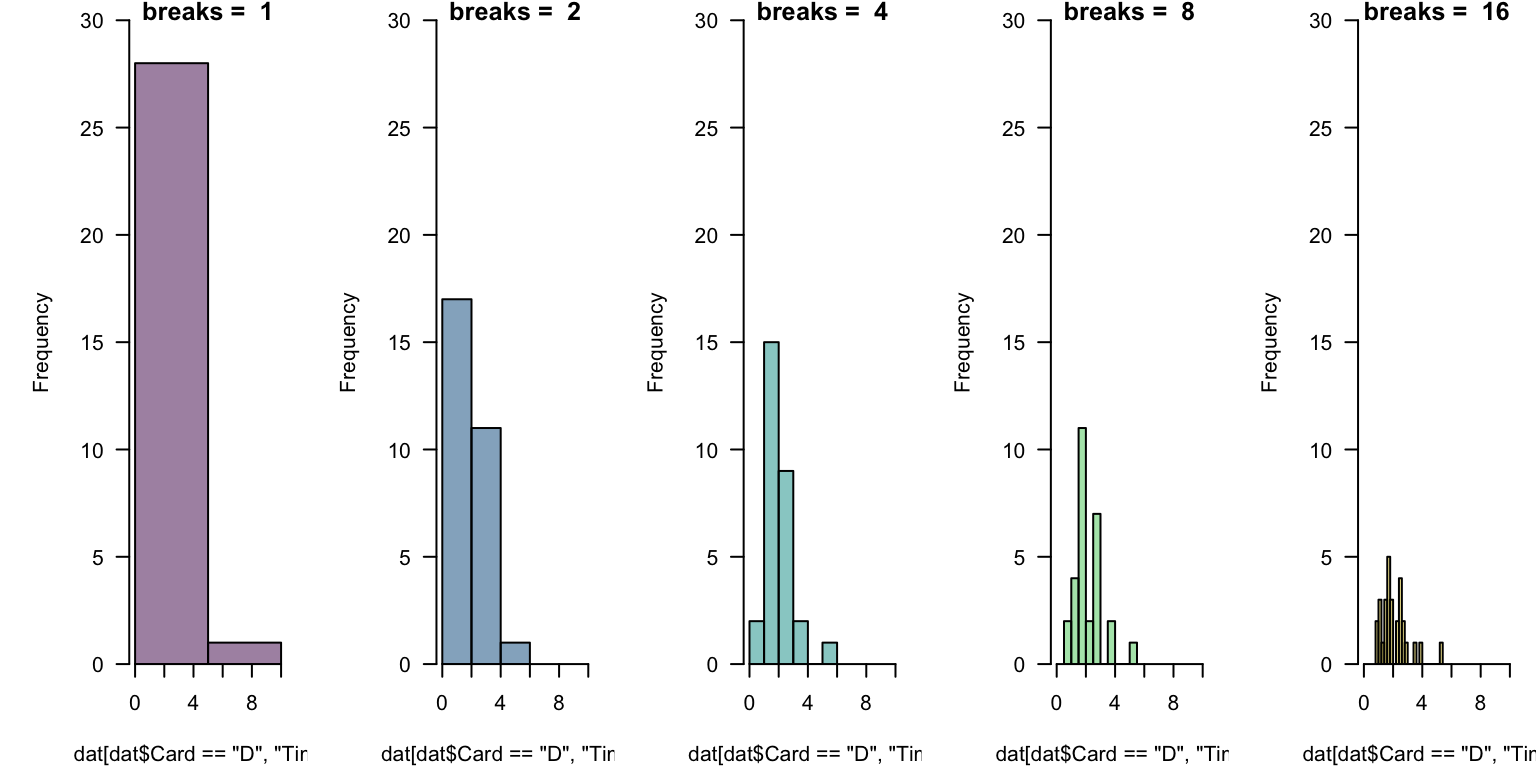

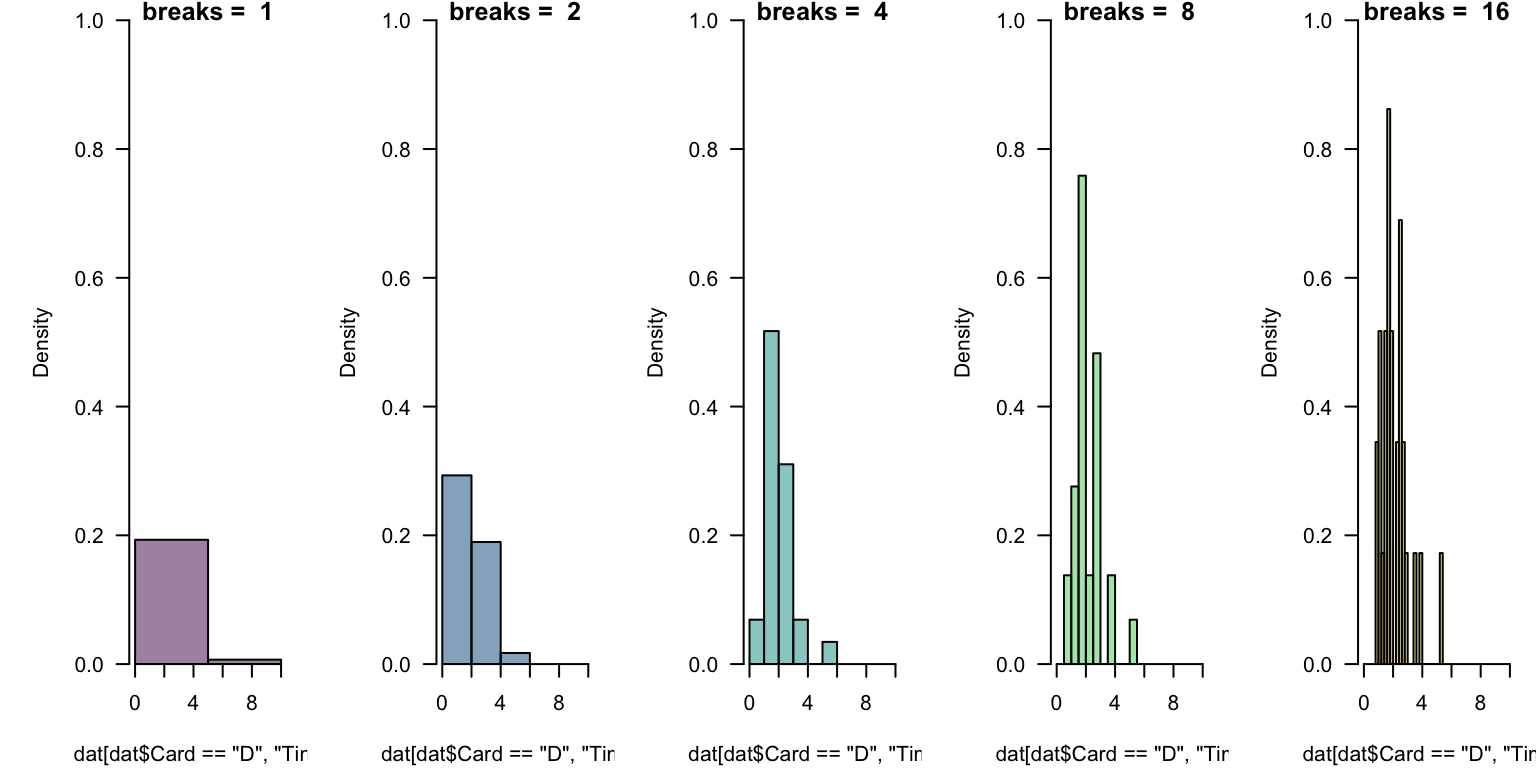

Deep challenges

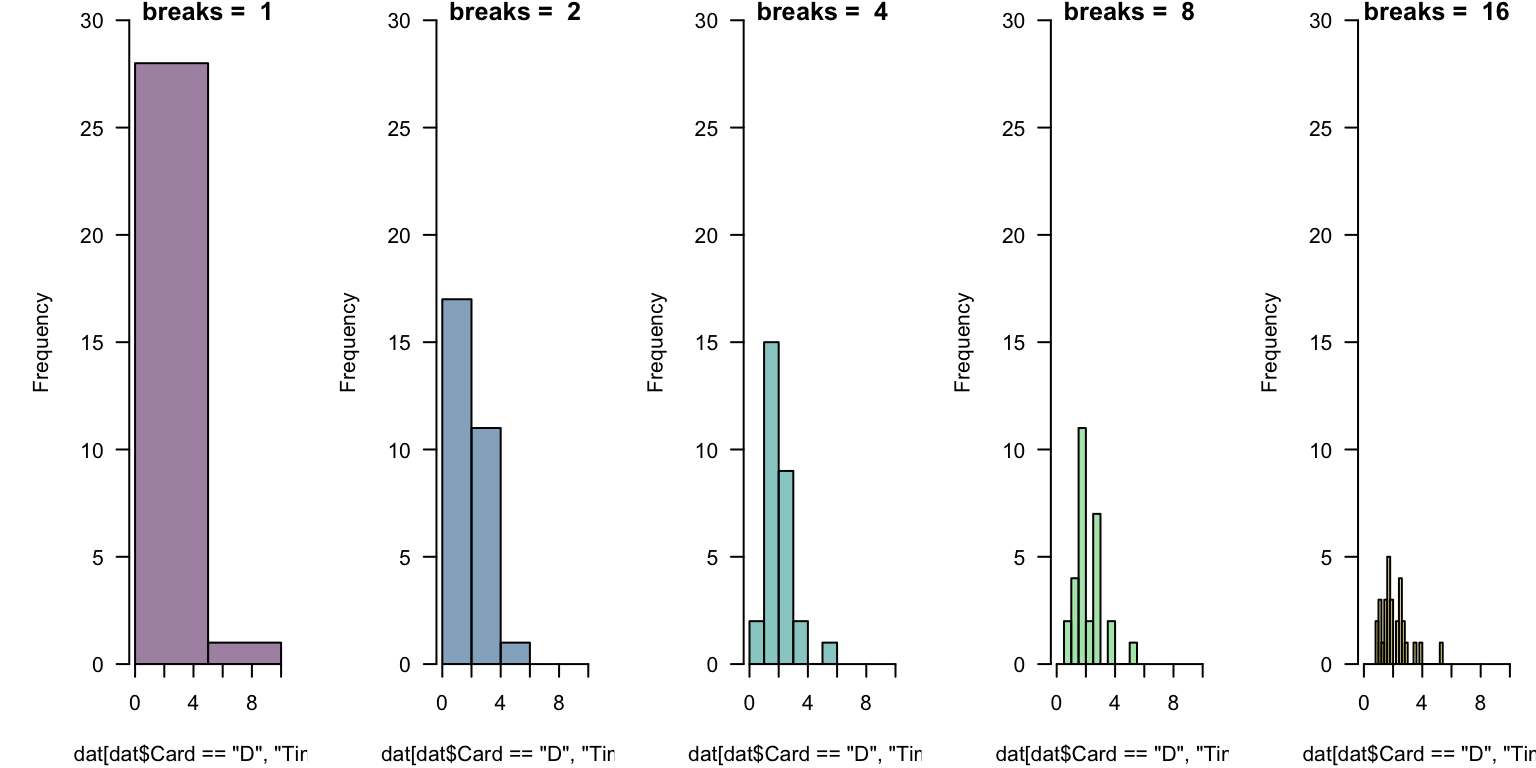

In general, there is no “right number” of “bins” (or “cells”) to display.

This takes experimentation. Which below is “best”? What might help you decide?

Challenges Revisited

This takes experimentation. Which below is “best”?

Reflection

Parameterizing breaks

The breaks = ... argument is quite flexible, but it works in occasionally mysterious ways.

A single number, breaks = n, is passed to an underlying function (pretty()), which asks for about n + 1 “pretty” (round-number) points delimiting about n bins. The number is only a suggestion, so the count you get can differ.

One nice option is to define equally-spaced ranges using the colon operator or seq(from = ..., to = ..., length = ...).
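A small sketch contrasting the ways of setting `breaks`, using hypothetical uniform data on (0, 10) so that `0:10` and `seq(0, 10, ...)` cover the whole range. `plot = FALSE` returns the histogram object without drawing, which makes the break points easy to inspect.

```r
set.seed(1)
x <- runif(200, min = 0, max = 10)  # hypothetical data on (0, 10)

# A single number is only a suggestion: hist() hands it to pretty()
h_n <- hist(x, breaks = 7, plot = FALSE)
h_n$breaks                            # round-number break points
pretty(range(x), n = 7, min.n = 1)    # compare with h_n$breaks

# Equally spaced breaks via the colon operator: 11 points, 10 bins of width 1
h_colon <- hist(x, breaks = 0:10, plot = FALSE)

# Equally spaced breaks via seq(): 21 points delimit 20 bins
h_seq <- hist(x, breaks = seq(from = 0, to = 10, length.out = 21), plot = FALSE)

# A named rule is another option (Freedman-Diaconis)
h_fd <- hist(x, breaks = "FD", plot = FALSE)

# Number of bins each approach produced
sapply(list(n = h_n, colon = h_colon, seq = h_seq, fd = h_fd),
       function(h) length(h$counts))
```

When you pass a vector of break points, it must span the range of the data, otherwise `hist()` stops with an error.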

Challenge breaks

Using any subset of the card data, experiment with various ways of implementing breaks discussed previously.

Comment on what this “tells you” about the data or how it might help you “tell others”!

Motivation for histograms

A great deal of statistical practice is related to assumptions of “normality”.

Histograms are a classic tool for the visual assessment of normality and can also reveal other properties, such as skewness, multiple modes, or outliers.

Generate 100 sampled values (e.g., with rnorm()) from a normal distribution with mean \(\mu = 2\) and standard deviation \(\sigma = 1\).

Then, add a histogram. Finally, repeat the sampling process and histogram visualization.
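A minimal sketch of the sample, plot, and repeat cycle with `rnorm()`. No `set.seed()` is used on purpose, so each run draws a new sample; add one if you want reproducible results.

```r
op <- par(mfrow = c(1, 2))  # two panels side by side

# 100 draws from a normal distribution with mean 2 and standard deviation 1
x1 <- rnorm(100, mean = 2, sd = 1)
hist(x1, main = "Sample 1", xlab = "x")

# Repeat the sampling and the histogram; the shape changes from draw to draw
x2 <- rnorm(100, mean = 2, sd = 1)
hist(x2, main = "Sample 2", xlab = "x")

par(op)  # restore the previous layout
```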

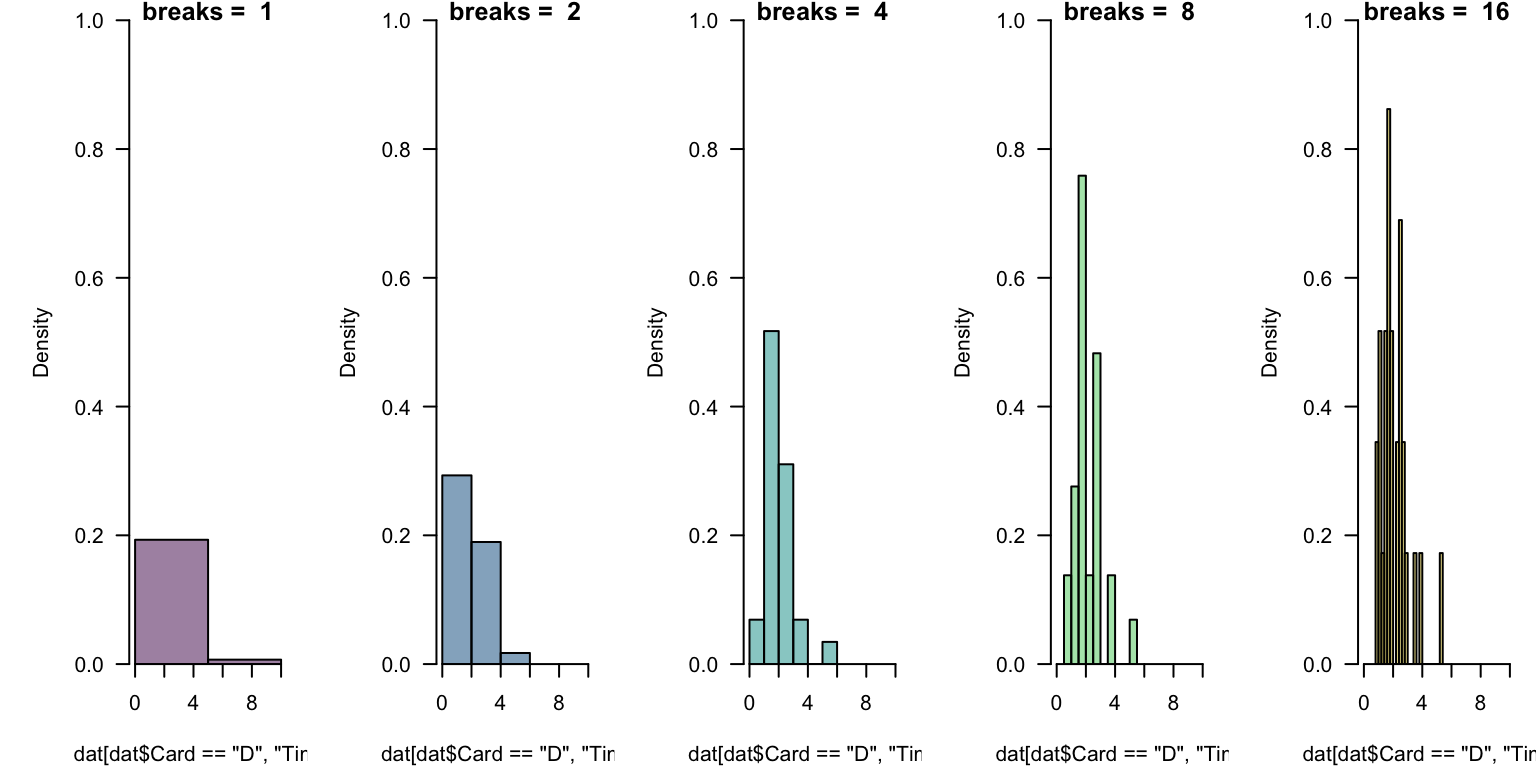

Transforming data

Generate the following data and make a histogram.

Now, make separate histograms of log10(rn) and log(rn).

What if anything do you notice?
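The data-generating code for `rn` is not reproduced here. As an assumption, this sketch uses lognormal draws from `rlnorm()` with its default parameters (`meanlog = 0`, `sdlog = 1`), in line with the later reference to “lognormal” data. It compares the two transformed histograms and checks how the transforms are related.

```r
set.seed(2025)
rn <- rlnorm(1000, meanlog = 0, sdlog = 1)  # assumed lognormal data

op <- par(mfrow = c(1, 2))
hist(log10(rn), main = "log10(rn)", xlab = "log10(rn)")
hist(log(rn), main = "log(rn)", xlab = "ln(rn)")
par(op)

# The two transforms differ only by a constant factor (a change of base)
all.equal(log(rn), log10(rn) * log(10))
```

Because one transform is a constant multiple of the other, the two histograms have the same overall shape; only the axis scale differs.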

Details, details

It really doesn’t matter which logarithm you use in a transformation; each has merits:

- it is generally easier for people to think in powers of 10 (e.g., when reading an axis)

- it is mathematically nice to use the natural logarithm

Label your axis carefully.

Today is the 35th day of 2025. Notice \(\ln(35) \approx 3.555\) and \(\log_{10}(35) \approx 1.544\).

These mean that \(e^{3.555} \approx 35\) and \(10^{1.544} \approx 35\).

- Both are fine ways to say “\(35\)”.

- They differ by a “change of base”.

One implication is that if we report “log”-transformed data, we need to be specific about which base.
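In R specifically, `log()` is the natural logarithm by default, so the base really does need to be stated. A short sketch of the arithmetic above, including the change-of-base identity \(\log_{10}(x) = \ln(x) / \ln(10)\):

```r
log(35)              # natural logarithm (R's default), about 3.555
log10(35)            # base-10 logarithm, about 1.544
log(35, base = 10)   # the same value as log10(35)

# Change of base: log10(x) = ln(x) / ln(10)
log(35) / log(10)

# Back-transforming recovers 35 in both cases
exp(log(35))
10^log10(35)
```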

Log-transformed data

Using a base-10 logarithm, transform the “lognormal” data and generate a histogram.

What has changed?
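A sketch comparing the original and base-10 log scales, again assuming lognormal draws from `rlnorm()` with default parameters. Alongside the histograms, it prints mean and median as a rough numeric check of symmetry (for right-skewed data the mean sits well above the median).

```r
set.seed(2025)
rn <- rlnorm(1000, meanlog = 0, sdlog = 1)  # assumed lognormal data
lrn <- log10(rn)                            # base-10 transform

op <- par(mfrow = c(1, 2))
hist(rn, main = "Original scale", xlab = "rn")
hist(lrn, main = "Base-10 log scale", xlab = "log10(rn)")
par(op)

# Rough symmetry check on each scale
c(mean = mean(rn), median = median(rn))
c(mean = mean(lrn), median = median(lrn))
```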

Weibull distribution “shape” parameter

Separately, generate random values and histograms for shape = 1/2, 1, 2.

Try to assess the role of shape.
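A sketch using `rweibull()`, varying only `shape`; `scale` is left at its default of 1 (an assumption, since the exercise names only the shape values). The sample size of 500 and `breaks = 30` are arbitrary choices.

```r
set.seed(7)
shapes <- c(1/2, 1, 2)

# One sample of 500 values per shape; scale stays at its default of 1
samples <- lapply(shapes, function(k) rweibull(500, shape = k))

op <- par(mfrow = c(1, 3))
for (i in seq_along(shapes)) {
  hist(samples[[i]], breaks = 30,
       main = paste("shape =", shapes[i]), xlab = "x")
}
par(op)
```

For reference when assessing the plots: with shape below 1 the density decreases from zero with a long right tail, shape = 1 is the exponential distribution, and shape above 1 gives a density that rises and then falls.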

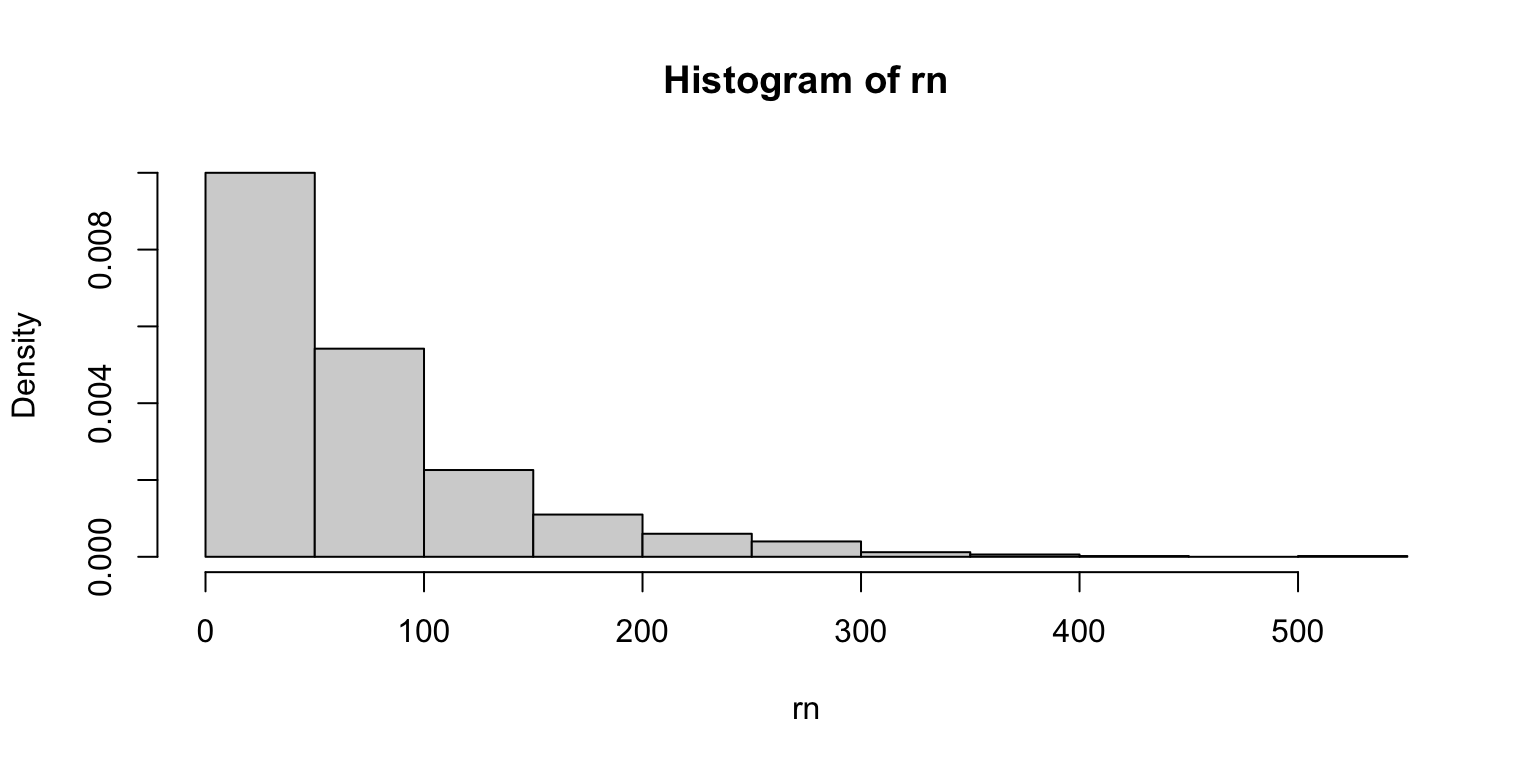

Histograms and densities

A common visual “test” of data properties is to overlay a density upon its histogram.
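A minimal sketch of the overlay, using a hypothetical normal sample. The key detail is `freq = FALSE`, which puts the histogram on the density scale (bar areas sum to 1) so it shares units with the curves.

```r
set.seed(3)
x <- rnorm(200, mean = 2, sd = 1)  # hypothetical sample

# freq = FALSE: bar heights are densities, so total bar area is 1
h <- hist(x, freq = FALSE, main = "Histogram with densities", xlab = "x")

# Kernel density estimate from the data (solid)
lines(density(x), lwd = 2)

# Theoretical normal density for comparison (dashed)
curve(dnorm(x, mean = 2, sd = 1), add = TRUE, lty = 2, lwd = 2)
```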

Histograms and densities

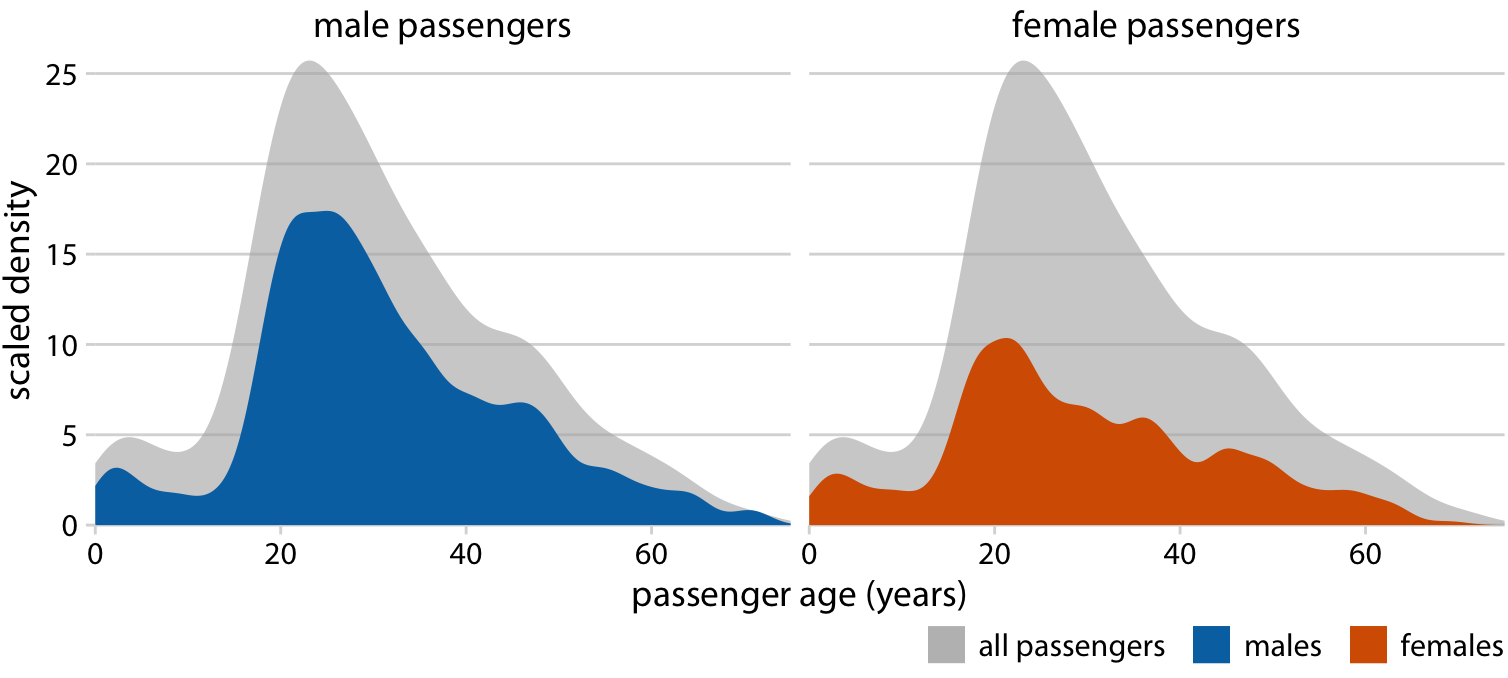

As mentioned, it is “best” to layer filled-density plots, rather than histograms.

That said, just like hist() has a variety of default choices, so does density().

Experiment with density(..., bw = ...) by choosing values for the “bandwidth”. Use your experiments to learn about what this argument does.
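A starting point for the bandwidth experiment, on a hypothetical normal sample. The values 0.1 and 1 are arbitrary choices to bracket the default, which `density()` computes with the `"nrd0"` rule (`bw.nrd0()`).

```r
set.seed(3)
x <- rnorm(200, mean = 2, sd = 1)  # hypothetical sample

d_small <- density(x, bw = 0.1)  # small bandwidth
d_auto  <- density(x)            # default bandwidth rule ("nrd0")
d_large <- density(x, bw = 1)    # large bandwidth

plot(d_small, main = "Effect of bw", xlab = "x")
lines(d_auto, lty = 2)
lines(d_large, lty = 3)
legend("topright", legend = c("bw = 0.1", "default", "bw = 1"), lty = 1:3)
```

Per the R documentation, `bw` is the standard deviation of the smoothing kernel (Gaussian by default), which is why small values track individual points closely and large values blur the shape.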

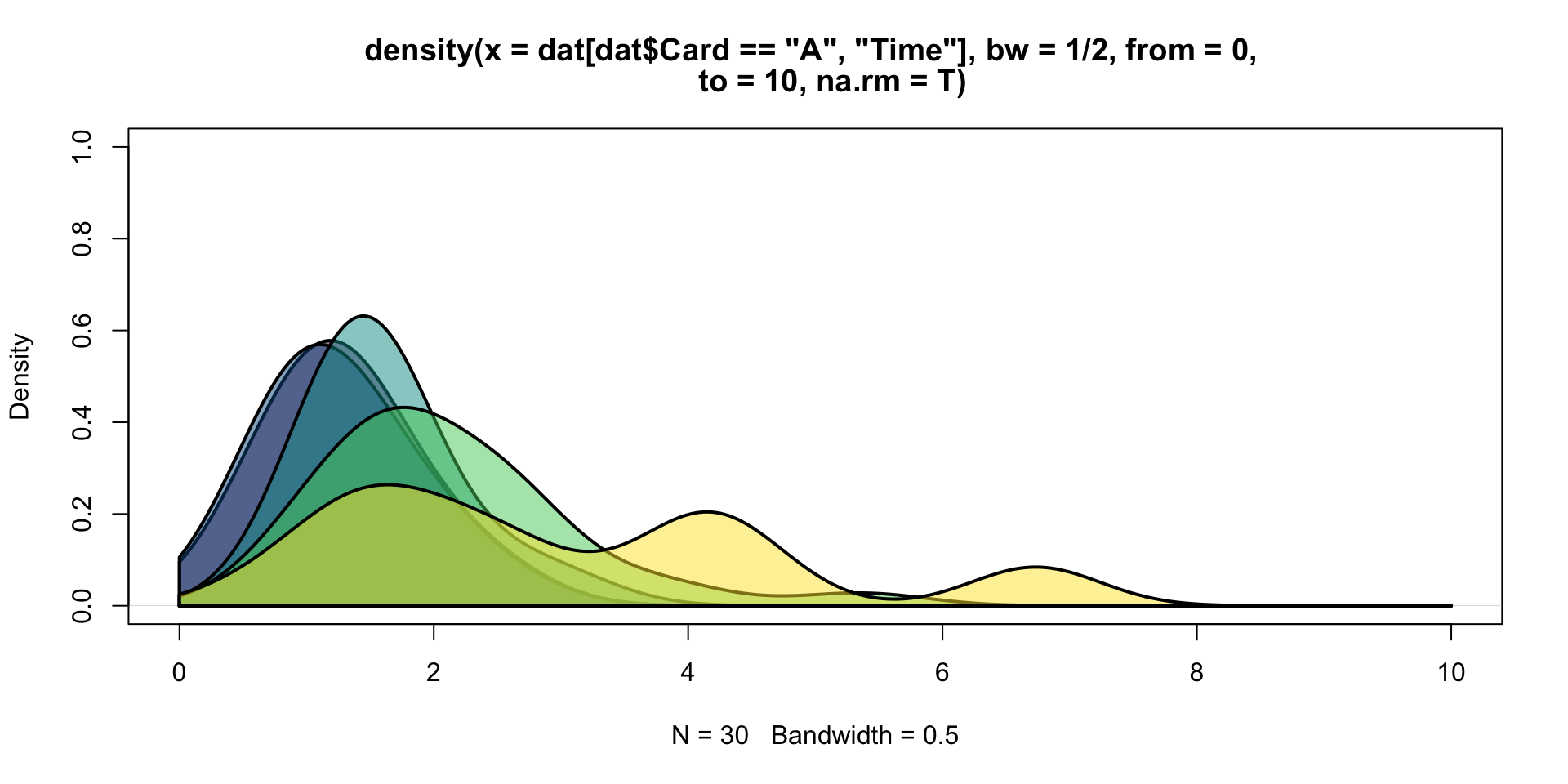

Layering densities

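```r
# Assumes dat holds the cards data, e.g. dat <- read.csv("cards.csv")
cols <- hcl.colors(5, alpha = 0.5)  # semi-transparent colors, one per card

# Draw card A's density first to set up the axes
plot(density(dat[dat$Card == "A", "Time"], from = 0, to = 10, na.rm = TRUE,
             bw = 1/2), col = cols[1], lwd = 2, ylim = c(0, 1))

# Add a filled density for each of cards A-E
for (i in 1:5) {
  den <- density(dat[dat$Card == LETTERS[i], "Time"], from = 0, to = 10,
                 na.rm = TRUE, bw = 1/2)
  # Close the curve along y = 0 so polygon() can fill the area beneath it
  polygon(c(den$x, rev(den$x)), c(0 * den$y, rev(den$y)), col = cols[i], lwd = 2)
}
```

Applications of layered densities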

Tables

Have we made it?

- I hope so, but if not, we will soon.

- Even within “base R”, there are a variety of useful data-exploring commands.

To be clear, tables are a form of data visualization.

These are somewhat analogous to MS Excel “Pivot Tables”.

Generating tabular displays (I)

Within “base R”, personal favorites are table() and aggregate().

It is often useful to assign these tables to a temporary variable such as tab or tmp.
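One possible use of both functions, on a hypothetical toy data frame with the same column names as the cards data (the values are made up for illustration). Results are assigned to `tab` and `tmp` as suggested above.

```r
# Hypothetical toy stand-in for the cards data (columns Card, Time)
dat <- data.frame(Card = c("A", "A", "B", "B", "B", "C"),
                  Time = c(2.1, 3.4, 1.2, 5.0, 2.2, 4.4))

# Counts of observations per card
tab <- table(dat$Card)
tab

# Mean Time per card; 'by' must be a list, and naming it labels the column
tmp <- aggregate(dat$Time, by = list(Card = dat$Card), FUN = mean)
tmp
```

With this non-formula form, the summarized column is named `x` in the result.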

Generating tabular displays (II)

Alternate “model” syntax is possible!
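A sketch of the formula (“model”) interface to `aggregate()`, reusing a hypothetical toy data frame. The `Long` column is an illustrative derived grouping variable, not part of the cards data.

```r
# Hypothetical toy stand-in for the cards data (columns Card, Time)
dat <- data.frame(Card = c("A", "A", "B", "B", "B", "C"),
                  Time = c(2.1, 3.4, 1.2, 5.0, 2.2, 4.4))

# Summarize Time within levels of Card; the result keeps the name "Time"
by_card <- aggregate(Time ~ Card, data = dat, FUN = mean)
by_card

# Several grouping variables combine with +
dat$Long <- dat$Time > 3  # hypothetical derived grouping variable
by_both <- aggregate(Time ~ Card + Long, data = dat, FUN = length)
by_both
```

Only combinations that actually occur in the data appear as rows.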

Merging and renaming

We can use the related command merge() to combine several aggregated tables, and names() to give the result informative column names.
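A sketch of merging several per-card summaries into one table and renaming the columns, on a hypothetical toy data frame. The new column names are illustrative choices.

```r
# Hypothetical toy stand-in for the cards data (columns Card, Time)
dat <- data.frame(Card = c("A", "A", "B", "B", "B", "C"),
                  Time = c(2.1, 3.4, 1.2, 5.0, 2.2, 4.4))

avg <- aggregate(Time ~ Card, data = dat, FUN = mean)
med <- aggregate(Time ~ Card, data = dat, FUN = median)
cnt <- aggregate(Time ~ Card, data = dat, FUN = length)

# merge() matches rows on the shared key column "Card"
both <- merge(avg, med, by = "Card")   # clashing columns become Time.x, Time.y
both <- merge(both, cnt, by = "Card")  # no clash, so this one stays "Time"

# Replace the uninformative names
names(both) <- c("Card", "MeanTime", "MedianTime", "N")
both
```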